Hi folks,

This is a document based POC article developed in early 2017 using Pentaho open source tools. The source data is taken from unece website for demonstration purpose. It can be found at http://w3.unece.org/PXWeb/en

Disclaimer: This article is strictly not a production ready one instead an experiment. It is to be used for educational purposes only, therefore following this approach may not suitable or work in your environment and the author or reviewers are not responsible for correctness, completeness or quality of the information provided. However, re-distributing the same content in any other sites is offensive and all rights reserved.

(staging of data is prepared from downloaded Excel files and in the process of creating source data base, the data will be profiled and cleansed)

2) Populate data mart by identifying dimensions and facts from source database.

3) Populate warehouse using PDI tool using incremental data load approach.

4) Create OLAP cubes for data analysis.

5) Data visualization using report and dashboard tools.

Thank you

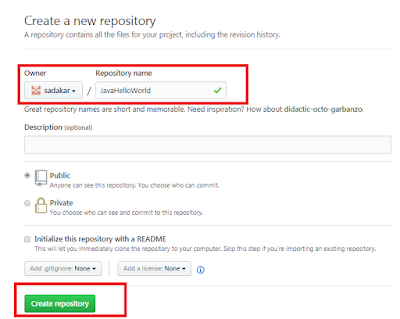

Sadakar Pochampalli

This is a document based POC article developed in early 2017 using Pentaho open source tools. The source data is taken from unece website for demonstration purpose. It can be found at http://w3.unece.org/PXWeb/en

Disclaimer: This article is strictly not a production ready one instead an experiment. It is to be used for educational purposes only, therefore following this approach may not suitable or work in your environment and the author or reviewers are not responsible for correctness, completeness or quality of the information provided. However, re-distributing the same content in any other sites is offensive and all rights reserved.

The sample architecture DWH life cycle from the POC is as follows

Tool set

ETL

|

Pentaho Data Integration (PDI)

|

pdi-ce-7.0.0.0-25

|

DWH

|

Kimball Star Schema

|

Kimball Star Schema

|

OLAP Analysis

|

Pentaho Schema Workbench(PSW)

and Saiku Analytics

|

psw-ce-3.12.0.1-196

|

BA Server

|

Pentaho BI Server

|

7

|

Reporting

|

Pentaho Report Designer(PRD)

|

prd-ce-6.1.0.1-196

|

Dashboards

|

Pentaho Ctools

|

7

|

Source of Data

|

Excel files

|

MS-Office 2016

|

Source database

|

MySQL

|

5.6.25

|

Target database

|

MySQL

|

5.6.25

|

OS

|

Windows

|

10

|

Below is the architectural approach for the POC

1)Prepare source database from downloaded Excel files(staging of data is prepared from downloaded Excel files and in the process of creating source data base, the data will be profiled and cleansed)

2) Populate data mart by identifying dimensions and facts from source database.

3) Populate warehouse using PDI tool using incremental data load approach.

4) Create OLAP cubes for data analysis.

5) Data visualization using report and dashboard tools.

Download the full document and source code here : Click Me

I hope this helps someone in community who are beginners or who are in intermediate stage in DWH.Thank you

Sadakar Pochampalli